The perils of constant feedback

When to accept criticism and when to ignore it

My friend is a product manager at a popular consumer tech company, and he was lamenting the rise of metrics-driven development.

“If we send a single push notification, 7% of people open the app,” he said. “But if we send two push notifications, five minutes apart, 12% of those people open the app.”

Intuitively we can sense that sending two, or ten, push notifications in five minutes is—long term—probably a bad idea. This is how we got follow-up emails, clickbait articles, and Satan’s own exit pop-ups.

But this overoptimization is part of a larger, more pernicious trend: the rise of ubiquitous, high-fidelity feedback.

Instagram gives you instant feedback on your life; Twitter gives you instant feedback on your thoughts; exit polls give politicians instant feedback on their positions; focus groups give Hollywood instant feedback on their movies.

And now we have Tay the racist Twitter bot, the Marvel cinematic universe, and Donald Trump.

But clearly we need some feedback. I’m not yearning for the days of waterfall software development. So what’s the right amount of feedback?

I am by no means a machine learning expert. But there’s a metaphor in machine learning that I really like, and I’m going to abuse it a bit to make a point.

Imagine you’re at the top of a mountain. You’re trying to hike to the bottom of the mountain, but unfortunately you’re surrounded by a dense fog.

Luckily you have a special instrument—let’s call it a slope-finder—that tells you which direction is most downhill from your current position. So you hike a little bit, check the instrument, change course, and hike a bit more.

This is the famous analogy for gradient descent, a machine learning technique for (among other things) updating the weights of a neural network.
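For the curious, here is a minimal sketch of that hike in Python. The "mountain" is a made-up one-dimensional valley, f(x) = x², and the names `slope_finder` and `hike`, along with every number below, are my own illustrative assumptions rather than anything from a real library.

```python
def slope_finder(x):
    """Slope of the hypothetical valley f(x) = x**2 at position x."""
    return 2 * x


def hike(x, step_size, n_steps):
    """Check the slope-finder, step downhill, repeat."""
    for _ in range(n_steps):
        x = x - step_size * slope_finder(x)
    return x


# Start far from the bottom (x = 0) and take 100 modest steps.
final_position = hike(x=10.0, step_size=0.1, n_steps=100)
print(final_position)  # very close to 0, the bottom of the valley
```

Each pass through the loop is one "check the instrument, change course, hike a bit more."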

But it’s also a good analogy for problem solving in general: we move in the most promising direction, periodically updating that direction based on external feedback.

So our original question—How much feedback should we absorb?—becomes: How often should we check our slope-finder?

Picture Janet Yellen descending this foggy mountain. JY is pretty cautious, so she checks her slope-finder after every single step. It’s slow, it’s tedious, but she knows she’ll never overshoot her destination.

But now imagine Bear Grylls trying to get to the bottom of our foggy mountain. BG checks his slope-finder once and then sprints 16 miles in that direction. When he finally checks his slope-finder again, it points him 90 degrees left. He turns and sprints for another 16 miles.

BG, you can imagine, will die of exhaustion before he comes to rest at the bottom of that valley. He will overshoot the bottom constantly, criss-crossing it like an idiot.
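Here is a rough sketch of BG's problem, assuming the same kind of made-up valley, f(x) = x², with its bottom at x = 0. The function names and the step size of 1.0 are hypothetical, chosen only because they make the overshoot easy to see.

```python
def slope_finder(x):
    """Slope of the hypothetical valley f(x) = x**2 at position x."""
    return 2 * x


def hike(x, step_size, n_steps):
    """Return every position visited, including the starting point."""
    path = [x]
    for _ in range(n_steps):
        x = x - step_size * slope_finder(x)
        path.append(x)
    return path


# An oversized step carries the hiker clean across the valley every time.
bear = hike(x=16.0, step_size=1.0, n_steps=4)
print(bear)  # [16.0, -16.0, 16.0, -16.0, 16.0]
```

With this particular step size he never gets any closer to the bottom; he just swaps sides of the valley forever.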

But JY also has a problem: she’s very likely to get stuck in the nearest hole. You can imagine her taking tiny little steps downhill, constantly following the slope-finder, right into the bottom of some dried-up lake.

Janet Yellen getting stuck in a hole
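And a sketch of JY's problem, assuming a bumpier hypothetical mountain, f(x) = x⁴ − 4x² + x, which has a shallow hole near x ≈ 1.35 and a much deeper valley near x ≈ −1.47. The function and the starting point are invented for illustration.

```python
def height(x):
    """Hypothetical bumpy mountain with one shallow hole and one deep valley."""
    return x**4 - 4 * x**2 + x


def slope_finder(x):
    """Derivative of height(x)."""
    return 4 * x**3 - 8 * x + 1


def hike(x, step_size, n_steps):
    """Check the slope-finder, step downhill, repeat."""
    for _ in range(n_steps):
        x = x - step_size * slope_finder(x)
    return x


# Tiny, cautious steps starting on the right-hand side of the mountain.
janet = hike(x=2.0, step_size=0.01, n_steps=2000)
print(janet)           # roughly 1.35: the nearest hole
print(height(janet))   # noticeably higher than height(-1.47), the deep valley
```

No amount of extra patience helps here. Once she is in the hole, the slope-finder reads zero and she stops.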

And so it is with feedback. If you ignore all feedback (like BG) and charge forward, immune to all criticism and advice, then you’ll probably never reach your goals.

If on the other hand you’re a slave to feedback (like our imaginary JY) you’ll move slowly and likely settle for a mediocre solution, because the best solution sometimes requires walking uphill.

So what are we to do?

The machine learning people have some ideas. What we’re calling feedback is inversely related to machine learning’s step size.[1] The greater the step size, the less often you’re checking in for feedback.

Unfortunately there’s no right answer to choosing a step size: sometimes science is more art than science. It depends a lot on what your loss function—err, mountain range—looks like.

But what’s interesting is that often changing the step is the best strategy. From Wikipedia:

In order to achieve faster convergence, prevent oscillations and getting stuck in undesirable local minima the [step size] is often varied…[emphasis mine]

Again—I am not an expert—but here are a few ML strategies for varying the step size (aka feedback).

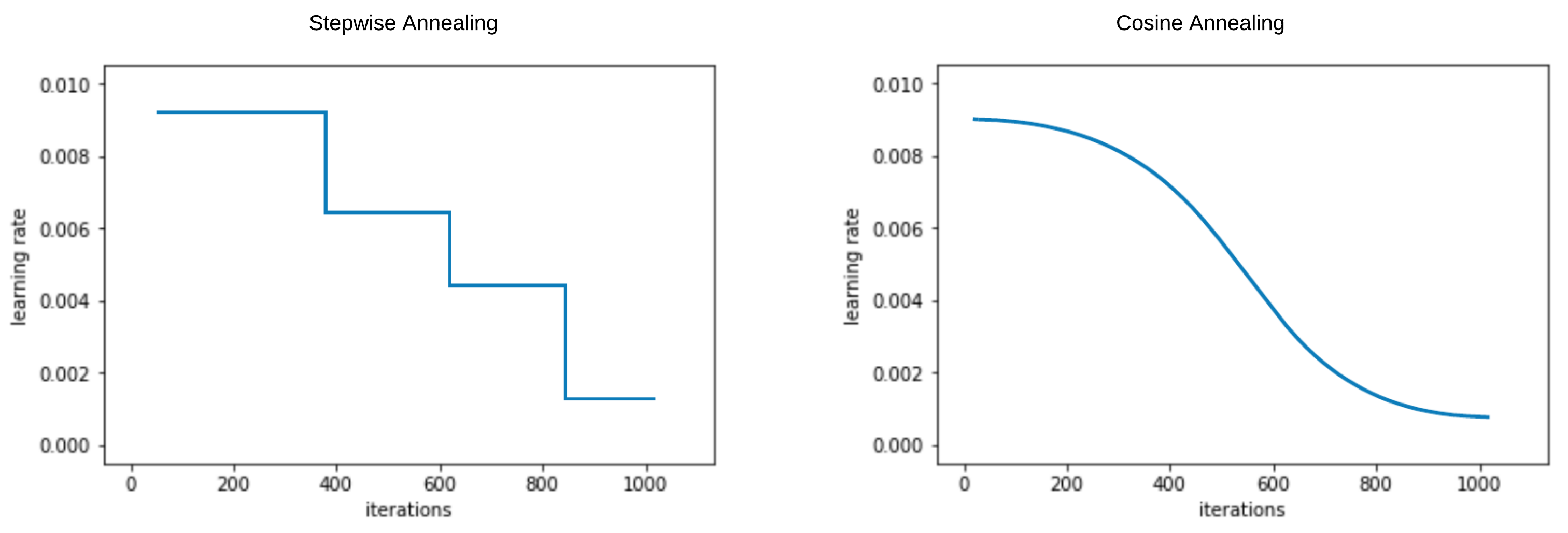

1. Gradually decrease it (annealing)

The idea here is to start with a relatively large step size and gradually decrease it over time. You take large steps at the beginning (less feedback), when you’re far away from your goal, but smaller and smaller steps (more feedback) as you zero in on the optimal solution.
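A minimal sketch of one way to do this, assuming a simple decay rule where the step size shrinks as 1 / (1 + decay × t). That rule is just one common choice among several, and the valley f(x) = x² and all the names and numbers are hypothetical.

```python
def slope_finder(x):
    """Slope of the hypothetical valley f(x) = x**2 at position x."""
    return 2 * x


def annealed_step(initial_step, decay, t):
    """Step size after t steps: large at first, smaller over time."""
    return initial_step / (1 + decay * t)


def hike(x, initial_step, decay, n_steps):
    """Gradient descent where the step size shrinks on every step."""
    for t in range(n_steps):
        x = x - annealed_step(initial_step, decay, t) * slope_finder(x)
    return x


print(annealed_step(0.4, 0.05, 0))    # 0.4 at the start
print(annealed_step(0.4, 0.05, 100))  # much smaller later on
final_position = hike(x=10.0, initial_step=0.4, decay=0.05, n_steps=200)
print(final_position)                 # very close to 0
```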

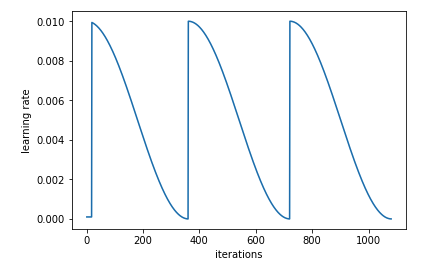

2. Cycle it between values (SGDR)

Stochastic gradient descent with warm restarts is a fancy way of saying “gradually decrease the step size over time, but occasionally jump back to a large step size.” The “restart” part helps you jump out of local minima, and makes it more likely that you’ll finally settle on a globally optimal solution.
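Here is a sketch of what such a schedule might look like, assuming a cosine-shaped decay and a fixed cycle length (real setups often lengthen the cycles over time). The function name `sgdr_step` and the particular values are my own placeholders.

```python
import math


def sgdr_step(t, max_step, min_step, cycle_length):
    """Step size at step t: cosine decay that restarts every cycle_length steps."""
    t_cur = t % cycle_length  # how far we are into the current cycle
    cosine = math.cos(math.pi * t_cur / cycle_length)
    return min_step + 0.5 * (max_step - min_step) * (1 + cosine)


# Three cycles of ten steps each.
schedule = [sgdr_step(t, max_step=0.1, min_step=0.001, cycle_length=10)
            for t in range(30)]
for t, step in enumerate(schedule):
    print(t, round(step, 4))
```

The printed values slide down from about 0.1 toward 0.001 over ten steps, then jump back up to about 0.1 at steps 10 and 20.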

3. Give it momentum

This one is intuitively appealing. The effective step size grows when the gradient keeps pointing in the same direction for a long time, allowing the “ball” to roll past local minima and to quickly traverse long downhills.
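A small sketch of the "long downhill" half of that claim, assuming the classic form of momentum where a running velocity accumulates past slopes. The constant-grade hillside and all names and numbers here are hypothetical.

```python
def slope_finder(x):
    """Hypothetical long, steady hillside: the grade is 1.0 everywhere."""
    return 1.0


def hike(x, step_size, momentum, n_steps):
    """Gradient descent with momentum; momentum=0.0 is plain descent."""
    velocity = 0.0
    for _ in range(n_steps):
        # Keep a fraction of the previous movement, then add the new push.
        velocity = momentum * velocity - step_size * slope_finder(x)
        x = x + velocity
    return x


plain = hike(x=0.0, step_size=0.1, momentum=0.0, n_steps=50)
rolling = hike(x=0.0, step_size=0.1, momentum=0.9, n_steps=50)
print(plain)    # about -5: fifty identical small steps
print(rolling)  # about -41: the same fifty checks, far more ground covered
```

Both hikers check the slope-finder the same number of times. The one with momentum simply trusts a consistent reading more with every step.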

So, tying this all together, here’s what I’m taking away from this tortured metaphor:

- Too little feedback is bad (you’ll never find an optimal solution)

- Too much feedback is bad (you’ll move slowly and get stuck in local minima)

- It’s best to vary the amount of feedback (according to the above strategies)

There is a time for lots of feedback (when you’re close to your goal) but there’s also a time for charging ahead, Twitterverse be damned.

I think this is difficult for humans. It seems we’re either very sensitive to feedback (e.g. Sarah doesn’t like my haircut? Let me shave my whole head) or totally hardened to it (e.g. stubborn and arrogant). But gradient descent tells us that we need to occasionally take lots of feedback and occasionally ignore it.

“It is easy in the world to live after the world’s opinion; it is easy in solitude to live after our own; but the great man is he who in the midst of the crowd keeps with perfect sweetness the independence of solitude.”

- Ralph Waldo Emerson, Self-Reliance

I worry that the deluge of instant, high-fidelity feedback is driving us toward local minima. Usually feedback encourages us to move down the path of least resistance, to make the easiest gains, but often the best gains are made by walking uphill for a bit.

Imagine you’re one of these people who tweets a lot. You tweet about a lot of things—your pet turtle, your midnight snack, your controversial opinion about fiddle-leaf fig trees—but sometimes you tweet about Donald J. Trump.

And, lo and behold, you notice that your tweets about Trump get more likes than your other tweets. So—consciously or unconsciously—you start tweeting more and more about Trump, saying more and more outlandish things, until you wake up one day and discover you are a Trump shock jockey.

“Trump shock jockey” is, at best, a locally optimal solution. You could have many more followers, and a much better Twitter account, if instead of taking tiny steps at the behest of your current followers, you took big swings and found a more worthwhile subject matter.

“The young character, which cannot hold fast to righteousness, must be rescued from the mob; it is too easy to side with the majority.”

- Seneca, Letters from a Stoic

[1] More commonly known as the learning rate, but step size is more consistent with the mountaineering analogy.
